I’ve been somewhat cautious about the secular millenarian AI cult. I’m a pragmatist and empiricist at heart when it comes to technology. Some specialist algorithms have made spectacular gains in recent years, good enough for everyday use, like better-than-human transcription and language translation. I enjoy having Super Cruise drive on the highway for me. All of these operate based on human input or under heavy human supervision.

Up until three months ago, generative AI tools like ChatGPT were interesting toys, reliable only in certain limited contexts. They were theoretically capable of handling a lot of business communications related to marketing, meeting summaries, and the like.

However, I think that says a lot more about the inefficiencies of how most businesspeople operate than about the technology as of January 1, 2025. Since I have always run business meetings toward a resolution, with action items following any discussion, and those conclusions were too critical to trust to an AI, such tools seemed superfluous[1]. Good summaries are useful for those, admittedly most, who talk for entertainment without clearly specifying objectives.

I am, however, quite interested in tools that can make my search for better mental models more efficient. Early this year, I had high hopes for the o1 reasoning model, one of the first large language models (LLMs) that would attempt to check its own work before spitting out an answer. I quickly became disenchanted, however, upon seeing it hallucinate sources and quotes. With some prodding, it could often correct its errors, but its practical usefulness remained limited.

On April 16, 2025, OpenAI released its o3 model, available only on paid plans. This model, like o1, features a recursive reasoning component that allows it to correct (most) hallucinations, but, more importantly, it can search the web to gather information directly. Unfortunately, the training process of LLMs introduces significant information compression into their structure, so outside of the most general, well-documented knowledge, these compression artifacts often produce information that, even if directionally correct, is wrong in its details; a model might arrive at the appropriate conclusion but cite non-existent sources. The ability to search live information on the Internet and use that, rather than its training data, as its authoritative sourcing is a huge game-changer. Just as important, it allows users to verify its conclusions directly.

As I used o3 over time, I noticed a change in my search behavior: I now go to o3 first for many, and now most, queries instead of Google. Strangely, I think this is partly because Google has gotten worse with its AI-based summaries. About 18 months ago, aside from topics touching on political correctness, Google was the best it had ever been, surfacing useful results by broadly interpreting queries. The workflow of an AI interpreting a naive non-expert user’s queries and surfacing the most relevant results is an awful lot like today’s o3!

Google, however, had a big problem. GPT-4, released in March 2023, was quickly polluting the web with almost undetectable spam, lowering the usefulness of the results Google did surface, while many users shifted to the shiny object of ChatGPT for queries despite its inaccuracy.

Scared by ChatGPT, Google quickly implemented its own AI summaries, which are often quite wrong and which waste time, because a savvy user must click through and verify the facts at the source instead of being served the source with a snippet (to quickly assess relevance), as on old Google. Right now, AI can be good or fast, but not both, the implications of which I’ll discuss momentarily.

Whether I like it or not, old Google probably isn’t coming back, and o3 is now better for nearly every search that is even semi-complex.

Using o3

The o3 model is best conceived as a better search engine, as much of a breakthrough as Google was over its incumbents in 1998. It’s right at least 95% of the time and can save significant effort collecting disparate facts across a traditional search engine’s results. Most importantly, at its current level of functionality, it’s a tool I will gladly pay for, much more than $20 per month if forced to, and I would be sad to revert to old-style Googling.

Unfortunately, this is subject to several caveats that, at the current state of the technology, augment the effectiveness of savvy users while harming average users:

o3 is kind of slow: to provide good summaries and research, it must a) retrieve multiple sources from the web, which takes 20-30 seconds, and b) analyze those sources, questioning its reasoning in a loop. A good answer can take 2-3 minutes, longer in “deep research” mode. This is much faster than doing it myself. However, the existence of faster options like GPT-4o and Google’s AI summaries means that average users, not understanding the technology, will default to them out of impatience and be subject to more frequent errors. The savvy get functionally smarter, the average user functionally dumber.

Google is not irrational to serve up fast, dumb AI, as most users are super impatient. Never underestimate the irrationality of consumers! One of the reasons Amazon is a dumpster fire of bad actors is that Amazon knows users care most about how fast its website loads, so it runs its massive catalog on a NoSQL database optimized for this. On the back end, however, this means even the most basic queries to identify scammy activity take days to run in batches, leaving Amazon constantly playing defense instead of proactively preventing scams.

o3 is only as smart as the sources it can access, and it will fake it if it can’t find good sources. The more obscure the topic, the more likely a hallucination. For example, when researching bureaucratic procedures for purchasing by government entities, I’ve seen it completely fabricate details of vendor applications. When challenged, it will say it made them up for illustrative purposes, when the expected behavior would have been to simply admit it couldn’t find the information.

As with all flat-priced subscription “chat” products, which operate at a loss for power users, o3’s computing resources are carefully metered, either to control costs or to meet user expectations of speed. It’s much better to ask one simple question at a time.

But even that’s difficult, because after an extended chat it tends to get dumber, a phenomenon called “context rot.” It’s important to remember that it has only a very basic reasoning capability, and once that’s exceeded, it falls back to dumb LLM next-word guessing. The best practice for research is to start new chats frequently, before it gets dumb, especially if the direction of the query changes significantly.

And as impressive as it is, the user must remember how dumb it can be. As a general intelligence, LLM technology, despite having almost infinitely more raw computing power, is still very bad at chess, for example, and inferior to specialist algorithms developed in the 90s. In one of my research projects, while calculating a population proportion, it reported that 55 divided by 2.4 was approximately 6 rather than approximately 23. Its response: “Thanks for catching that—I should have done the division instead of eyeballing.” I’m a math guy and almost missed this, because it’s so convincingly rendered in that gorgeous LaTeX font.
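The practical lesson is to recompute any arithmetic the model shows rather than trust its typesetting. Below is a minimal Python sketch of that habit, applied to the 55 / 2.4 slip. The helper name `check_division` and the 5% relative tolerance for "approximately" are illustrative assumptions, not features of o3 or any particular tool.

```python
import math


def check_division(numerator, denominator, claimed, rel_tol=0.05):
    """Recompute a quotient and report whether a claimed value is within rel_tol of it.

    rel_tol=0.05 (5%) is an arbitrary, illustrative threshold for "approximately".
    """
    actual = numerator / denominator
    return actual, math.isclose(actual, claimed, rel_tol=rel_tol)


# The slip described above: 55 / 2.4 reported as roughly 6.
for claimed in (6, 23):
    actual, ok = check_division(55, 2.4, claimed)
    print(f"55 / 2.4 = {actual:.2f}; is 'about {claimed}' acceptable? {ok}")

# prints:
# 55 / 2.4 = 22.92; is 'about 6' acceptable? False
# 55 / 2.4 = 22.92; is 'about 23' acceptable? True
```

The point is not the code itself but the discipline: a ten-second independent recomputation catches exactly the kind of confident, nicely formatted error that is easy to read past.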

So far, it seems most useful for bringing a savvy user up to speed on general topics, doing basic research for which copious information already exists, and sometimes generating useful hypotheses in more obscure areas. The best mental model for o3 is that it is your genius, idiot savant buddy with a schizophrenic comorbidity who is mostly right but occasionally gets things entirely wrong. Nothing it says should be relied upon until verified.

I’m most impressed with its ability to synthesize empirical data across vast corpora of research literature. It can’t yet generate “secrets” or genuine innovation per se, but there’s enough irrationality in the world that many empirically verified facts are, in effect, open secrets.

This has to be coaxed out of it by the right lines of questioning (and its obsequiousness means proper queries matter a lot), and the user has to be rational enough to trust evidence over gut hunches, but it seems smart users can synthesize true expertise across a large number of domains, building mental models better than the competition’s without years of education in each specific field. It seems the ultimate fulfillment of Scott Adams’s “systems vs. goals” framework, for those few inclined to it.

The Downsides of AI

Strangely, it is better at positive accomplishment than at avoiding mistakes. Two LLMs, for example, recently achieved gold-medal-level scores at the International Mathematical Olympiad. Its peaks are somewhat unbelievable. It should be noted, though, that this accomplishment was achieved with private models not available for public use and verification; it’s entirely possible, maybe likely, that Google and OpenAI somehow cheated. These are advanced high school math problems, and expert mathematicians, of whom these companies have many, could have helped the AI. It is a well-known tactic in Silicon Valley to semi-fraudulently simulate software functionality, under the advice to “do things that don’t scale,” to impress the market and worry about figuring out the technical side later.

In addition, reliable work is about more than positive accomplishments, and arguably mostly about avoiding screwups. The jagged edge seems to be high achievement but low reliability. Hence, AI can (maybe, if we believe them) hold its own at international math competitions against 145+ IQ humans when the stakes of failure are low, but cannot yet compete with 80 IQ humans at driving cars[2]. It also cannot yet replace a 110 IQ manager at a convenience store.

Unfortunately, the apparent competence and confidence of AIs is a huge trap for midwits. I know rich frat bros, smart enough but not geniuses, who think they no longer need attorneys because they believe LLMs are competent to review multi-million-dollar contracts. But even when you do hire an attorney now, if they’re dumb enough to use AI credulously (including, ironically, attorneys representing a major AI company), they might get sanctioned for submitting court briefs with hallucinated cases. The online marketing crowd is also an eager early adopter, filling the Internet with more inbred AI slop that may work for a time but will quickly tire and frustrate consumers.

AI also seems to be a huge risk in education. Accurately assessing learning is inherently difficult at scale. A one-on-one tutor can do it, but classroom teachers serving many students have always had to resort to shortcuts involving homework. This works well enough if students don’t cheat, but cheating on these imperfect, non-real-time assessments has always been possible: they indicate learning when answers are recalled without aid, but they are not inherently difficult if one has the source available. Even “cheating” has educational value if it involves a student physically opening a book and interacting with its contents to find answers; the book’s physical aspects, such as locations on a page, can help tie learning memories together. AI, however, lowers this effort to nearly zero and lets students cheat with hardly any memory formation.

I think an accurate description of the future is that AI will augment the capabilities of those who learned to read, think, and write critically before the advent of AI; for those educated with available AI, it will become a crutch stunting intellectual formation. Today’s students face an impossible choice: work harder, learn more, avoid AI, and make worse grades, or use AI to make better grades. Most education is fake anyway, so what does it matter?

It seems schools, if they care about being effective, are going to need to invest more in faculty and instruction, falling back to assessing students with oral exams, proctored exams, or in-class written assignments blue-book-style, or else reconcile themselves to certifying students pretending to learn while they pretend to teach. Given the abject laziness I’ve observed in college faculty, their fear of student feedback if they dare hold students accountable for learning, and the pressure to have their not-so-bright students demonstrate “mastery” on exams and assignments for accreditation, most would rather not know how much students fake learning with AI and will simply look the other way.

This was, unfortunately, already the case at most non-selective universities, but it will be a tragedy for the margin of talented students who might be motivated to learn only when challenged; the “spark” that every teacher dreams of lighting in a capable but incurious student will increasingly be smothered by easy AI cheating. The 5-10% of students who are naturally intellectually curious and self-motivated will be fine, AI or not, as they enjoy reading, learning, and writing for their own sake. But even before AI, most students were already using SparkNotes, Chegg, or other aids to avoid reading or thinking, and badly plagiarizing others’ writing; LLMs simply make this easier and harder to detect reliably.

Perhaps AI simply makes it more obvious that our forebears[3] were correct in thinking that, beyond basic literacy and arithmetic, the traditional studies of high school and college were wasted on most of the population. Given that few of today’s college graduates possess the competency associated with an 8th-grade education in the 19th century, this seems true. Bryan Caplan argues that most modern education is net-negative for society, serving mostly as a costly signaling arms race that makes life increasingly miserable for upper-middle-class kids, even before AI prevented most students from learning anything.

AI and Jobs

One good rule of thumb is to never believe anything a CEO says about unpopular decisions like layoffs. The job market is certainly soft right now, but that trend began in 2023 before the major breakthroughs in AI of the last 18 months.

My gut as an operator is that AI, while becoming more interesting, isn’t quite yet able to replace humans, only augment them, at best saving 50% of value-adding labor in very specific applications requiring expert validation and calibration[4]. CEOs have incentives to claim AI is replacing jobs, however, because it makes them seem fluent in the broader enthusiasm and, more importantly, provides a pretext for cutting jobs without being blamed. These trends come in waves, as corporations move in packs to avoid being the singular focus of ire.

The better explanation for job market softness is overhiring during the previous, post-Covid easy-money regime. The market rewarded top-line and headcount growth when interest rates were low. When money is tight and inflation causes costs to rise, businesses must unwind these excess hires to restore expectations of earnings growth. Since much of the 2021-22 hiring spree was focused on non-meritorious, DEI-type hiring practices, broad rather than targeted layoffs, along with new hiring freezes, are the most effective way to avoid liability. You can’t just keep the good ones if there’s disparate impact[5], and liability is much harder to avoid in firing and promotions than in hiring.

One other longstanding thesis of mine is that most companies have a significant margin of “BS jobs” that they have been slowly jettisoning since at least the 90s. One troubling development for mediocre workers is that, since Covid, I believe we have seen a slow decoupling of managerial compensation and status from headcount. The rise of boutique firms, particularly in private equity and venture capital, has created a class of well-compensated, white-collar elites who operate semi-remotely, with few employees.

If more mainstream companies adopt this model, compensating elite workers highly despite a small number of direct reports (or else losing some of them to employers that will), then middle managers will be less motivated to overhire in the future to justify their salaries, and more motivated to cut costs and claim even fake or marginal use of “AI” to show they are as productive as a team of 20 used to be. “I manage a team of 20 AI agents” will be the new claim. And this will be easy, as every software tool any professional uses now has some AI gimmick, however useless or marginal, or simply a rebranded old-school algorithm.

If they can get their bosses to justify six-figure salaries without a redundant “team” attached, so much the better for everyone. People, after all, are a hassle, and the lack of them makes it easier for high-capability workers to disappear from the office and work from a beach[6]. Who knows what the future holds for AI actually replacing people, but if AI hype becomes the smart-sounding pretext for cutting jobs while expanding margins and holding onto fat management-tier salaries for those who survive the cuts, it’s watch out below for broader middle-class employment.

The lesson for new grads? The most reliable jobs are going to be those where you’re protected by a licensing cartel that will enforce your interests in the state legislatures. Even if AI replaces many jobs, lawyers will want someone to sue, which means we’re still going to need licensed accountants, physicians, dentists, speech therapists, and the like to sign off on whatever the AI is doing. I expect more licensing cartels for white collar workers and more unionization for blue-collar workers in the future as an unspoken way to insulate the middle and skilled working class from replacement by AI, with AI’s errors used as a pretext to protect the public. Keeping people in respectable work, even if they’re just watching the robots, is better than income redistribution schemes.

The Future of AI

I wanted to get this column into the world as a time capsule of my thoughts before the expected August 2025 release of GPT-5. For entertainment purposes only, here are some predictions:

Productivity gains from AI will be disappointing in at least the short term (18 months). The delayed release of GPT-5 must mean sharply diminishing marginal returns per unit of development effort in AI technologies at the moment. Acceleration is slowing; the third, and probably the second, derivative of innovation is negative, at least for LLMs. This is always true of any technology that matures, but the pace of change can give clues to the ultimate terminus.

Apple’s continued reticence about incorporating AI into its products is due to appropriate caution, not incompetence. This is the best-run, most profitable tech company in the world, which makes the world’s best in-house processor hardware, and to think it is clueless about LLMs is fantasy. That AI is useful to me as a research tool doesn’t mean it’s ready for production in the many applications where people expect simple, reliable functionality. Apple is appropriately cautious about protecting its brand reputation for reliable computing for people too busy to tinker with not-quite-baked tech. It does use AI where it works reliably, for example, for summarizing messages and predicting typing, which now saves me a bit of time each day. What AI can’t do reliably yet is manage a calendar or email conversationally like a personal secretary. That Apple promised and then failed to deliver the more advanced Siri features is further indication of slowing innovation; when it announced them, Apple reasonably extrapolated the rate of error reduction linearly and expected errors to reach tolerable levels in time, but progress must have stalled. When AI can do these tasks reliably, Apple will incorporate it. Given its gigantic cash pile and complaints from investors who perceive it as falling behind in the AI race, Apple must also see that no acquisition target is any closer to solving these problems than it is, or else it would already have closed on one.

All of the AI giants are going to need to find a way to incentivize humans to keep writing expert-level content, so that the LLMs don’t eventually choke on their own vomit. Much of o3’s internal reasoning, I’ve noticed, is spent evaluating and discarding sources likely contaminated by spam. OpenAI will likely, if it hasn’t already, develop its own web spider and web index, along with highly secretive AI-detection algorithms to avoid citing its own or other LLMs’ output in the wild. Human-written content will be favored among cited sources, rewarding experts with at least some credit and attention for their efforts. This is especially true of new or updated knowledge: LLMs still train in batches with a knowledge cutoff date, so to keep the models (and their web-referenced output) fresh and accurate, AI companies will have to find ways to filter out AI slop and reward human creators.

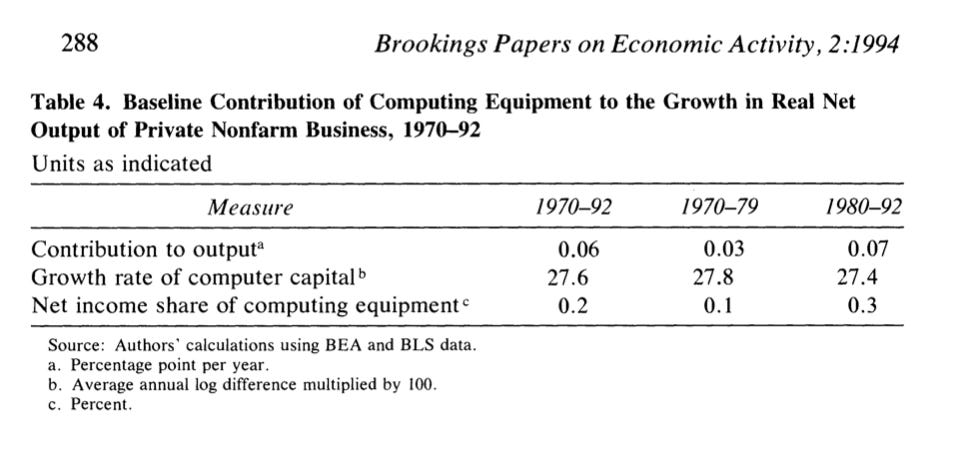

Those productivity gains that do occur might be ephemeral in economic measures to the extent that they simply enhance CYA paper-pushing and zero-sum marketing activities. The most common economic pattern is that supply creates demand, so AI could just exponentially increase the number of TPS reports without adding value by cutting jobs or serving customers. If a company doubles its social media output with AI assistance, it doesn’t make the economy as a whole wealthier. Cal Newport of Deep Work notoriety cites this Brookings study showing that the entrance of the first computers into offices did very little to enhance productivity, net of costs (less than 1/10 of a percentage point annually during a period of 2-3% annual growth):

I can vouch for this: my first professional job, at a Johnson & Johnson factory, involved a transition from paper records to SAP software, a project that both predated and continued after my term of employment. They spent millions and millions on consulting contracts and missed the deadline by several years (the consultant hung around the factory with an assistant and never seemed to get much done). Two years in, when I quit, they still had vaporware, whereas Pearl, the sweet Appalachian lady who checked and validated paper files manually for me, could locate records within minutes upon request; she had 20 years of experience, was sharp as a tack, and knew the entire factory from floor to ceiling. I assume they finished at some point after I left? The result was not a more productive factory (Pearl made, in 2002, maybe $20 an hour plus benefits, much cheaper than software), but a system where execs in New Jersey could theoretically pull reports they’d never be interested in reading, using software they didn’t use often enough to learn well, when Pearl could grab anything they needed and fax it in 15 minutes, and might remember anecdotal details useful to any investigation. But hey, for some ambitious middle manager with an MBA, stacking cash selling overpriced sutures under one of the world’s most valuable trademarks is boring compared to being a “thought leader” wasting shareholders’ money on software boondoggles that continued long after they’d been promoted for their “vision.”

Where AI is important, the most productive sectors of the economy are already using specialized AI that does a better job than LLMs. Chess, where LLMs continue to struggle, was “solved” in 1997 when Deep Blue beat the best human chess player alive, and no human has been competitive with computers since. Every energy company is using AI to get better at finding oil. Every biotech company is using specialist AI to search for candidate compounds. Really smart people are already incentivized to use the best technology where there’s money to be made, and it’s getting better. These are real innovations that are to some extent already baked in, and they will tend to be ignored in favor of the consumer-facing technologies accessible to non-specialists. And it seems LLMs will be perpetually behind these specialist AIs. It’s hard to imagine, for example, feeding the latest LLM a map of the Permian Basin and having it do a better job identifying candidate drilling sites than the specialized machine learning already used by petroleum engineers at Chevron.
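The prediction that the second and third derivatives of innovation are negative can be made concrete with finite differences, a discrete stand-in for derivatives. The minimal Python sketch below uses a made-up series of yearly “capability” scores (hypothetical numbers, not measurements of any real model): progress is positive every year, yet the gains shrink (negative second difference), and they shrink faster each year (negative third difference).

```python
def differences(values):
    """Return successive differences, a discrete analogue of a derivative."""
    return [b - a for a, b in zip(values, values[1:])]


# Hypothetical yearly "capability" scores, invented purely for illustration.
capability = [0, 10, 19, 26, 30]

first = differences(capability)  # rate of progress per year
second = differences(first)      # acceleration of progress
third = differences(second)      # change in that acceleration

print(first)   # [10, 9, 7, 4]  -> still improving every year
print(second)  # [-1, -2, -3]   -> but the yearly gains are shrinking
print(third)   # [-1, -1]       -> and shrinking faster over time
```

The hard part in reality is not the arithmetic but agreeing on a capability metric to feed into it.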

All that said, the state of current technology is exciting and useful. I’m sure it will continue to improve, augmenting curious minds with a wealth of information.

[1] I also believe discussions, to allow appropriate creativity, should have a certain laxity and frankness, such that the last thing I would want is transcripts floating around for lawyers to get ahold of and distort my meaning. I’m not a subscriber to the Bill Belichick school of ethics (“if you ain’t cheatin’, you ain’t tryin’”), but sometimes brainstorming outside the realm of morality is useful for finding not shortcuts around ethics, but shortcuts that are, in fact, ethical. If you’re the kind of person who’s comfortable with robots transcribing everything you say, you’re kind of a tool.

[2] This is demonstrated by the fact that the most successful self-driving system, Waymo, resorts to expensive, superhuman LIDAR sensors. Tesla’s effort, which relies only on visual information from cameras, akin to human eyesight, is much further behind.

[3] The theologian Robert Dabney, writing against public education, claimed that the half-educated man is worse than the man without any education, for the latter at least knows his ignorance and will humbly look to his betters for guidance, whereas the former thinks he’s educated and proceeds without caution into abject foolishness. Fast, dumb AI catering to the impatient risks creating a society not of half-educated but of entirely pseudo-educated people, whose actual knowledge, relative to their self-appraisal of it, will reach historic lows.

For the record, I disagree with Dabney’s extreme position on this. The downside of creating masses of half-educated people was more than offset by enabling the systematic discovery of formerly underdeveloped talent. IQ, while highly heritable and correlated with parental income, is distributed widely enough that most smart people are not born into families who can afford private education, making some public funding (but not necessarily public operation) of education a decent investment.

Current levels of funding, though, are excessive, produce negative marginal value, and could be cut by ~60%, which sounds extreme but just takes us back to 1960 levels, which were sufficient to get to the moon. Caplan finds negative social returns to education for all students past 8th grade after adjusting for ability, which seems to prove too much, but this may be due to aggregating all students and degree types together. I highly doubt that liberal arts degrees these days, except at a few elite, traditional programs like St. John’s College, help anyone become a better thinker or writer; however, this doesn’t seem to be the case for more technical fields. Does he expect Boeing to IQ test 8th graders and apprentice them, starting with Algebra II, into aerospace engineers?

[4] A caveat: the more of your labor current AI technologies can replace, the less value your labor adds. To paraphrase Jeff Foxworthy, if AI as of mid-2025 can do most of your job, you might have a BS job.

[5] Yes, the Trump administration did eliminate federal enforcement of disparate impact employment litigation, but the law remains hazy for private civil suits. Disparate impact is easier to defend on the hiring side, say with a reasoning test or GED requirement, but harder for firing and promotion decisions, where employee performance evaluations are inherently more subjective; there, a straightforward discrimination claim can have more legs in court, and thus extract a settlement.

[6] We are rather odd historically in that our elite work 60-hour weeks while the poor are obese and idle. The most talented white-collar workers are rational to demand more flexibility given the decreasing marginal utility of wealth and productivity per hour worked.
